
What Is Parallel Computing?

In the simplest sense, parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

- A problem is broken into discrete parts that can be solved concurrently

- Each part is further broken down into a series of instructions

- Instructions from each part execute simultaneously on different processors

- An overall control/coordination mechanism is employed
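To make these four steps concrete, here is a minimal Python sketch that sums a large list by splitting it into chunks. The function names `partial_sum` and `parallel_sum` and the default of four parts are illustrative choices, not part of any standard API. The standard library's `concurrent.futures.ThreadPoolExecutor` serves as the coordination mechanism. One caveat: in the standard CPython interpreter, the global interpreter lock (GIL) prevents pure-Python threads from running CPU-bound code truly simultaneously. The sketch therefore shows the structure of the decomposition rather than a real speedup. `ProcessPoolExecutor` offers the same `map` interface and runs the parts on separate processor cores, although it needs an `if __name__ == "__main__":` guard on platforms that spawn worker processes.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    """Solve one discrete part of the problem: sum a single chunk."""
    return sum(chunk)


def parallel_sum(data, n_parts=4):
    """Sum `data` by splitting it into up to `n_parts` chunks handled by a worker pool."""
    # 1. Break the problem into discrete parts (contiguous chunks).
    size = max(1, -(-len(data) // n_parts))  # ceiling division, at least 1
    chunks = [data[i:i + size] for i in range(0, len(data), size)]

    # 2. Hand each part to a worker; the pool runs them concurrently.
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        partials = list(pool.map(partial_sum, chunks))

    # 3. Coordination step: combine the partial results into the final answer.
    return sum(partials)


data = list(range(1, 1_000_001))
print(parallel_sum(data))  # prints 500000500000
```

The final combine step is cheap here because addition is associative. Problems whose parts depend on each other need more coordination than a single merge at the end.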

Parallel Computers

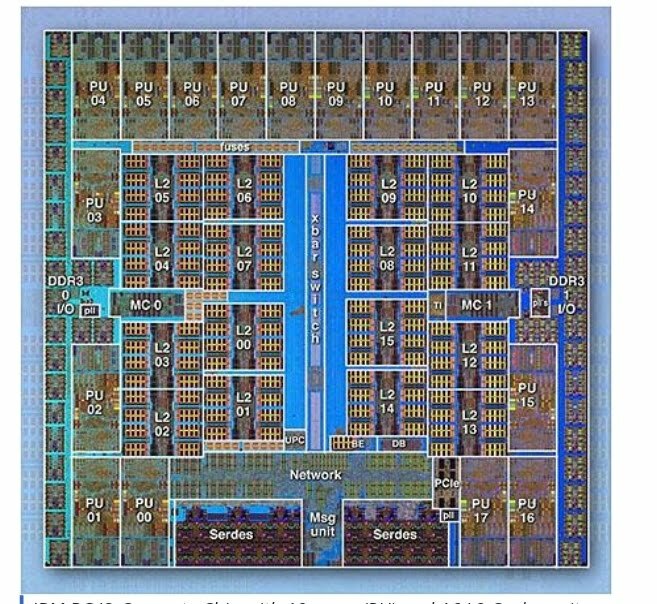

Virtually all stand-alone computers today are parallel from a hardware perspective:

- Multiple functional units (L1 cache, L2 cache, branch, prefetch, decode, floating-point, graphics processing (GPU), integer, etc.)

- Multiple execution units/cores

- Multiple hardware threads

- Networks connect multiple stand-alone computers (nodes) to make larger parallel computer clusters

- The majority of the world’s large parallel computers (supercomputers) are clusters of hardware produced by a handful of (mostly) well-known vendors.

Types of Parallelism

- Bit-level parallelism – A form of parallel computing based on increasing the processor’s word size. It reduces the number of instructions the system must execute to perform a task on large-sized data. Example: consider a scenario where an 8-bit processor must compute the sum of two 16-bit integers. It must first add the 8 lower-order bits, then add the 8 higher-order bits together with the carry from the first addition, thus requiring two instructions to perform the operation. A 16-bit processor can perform the operation with just one instruction.

- Instruction-level parallelism – A processor that executes instructions strictly one after another completes at most one instruction per clock cycle. Independent instructions can, however, be re-ordered and grouped so that they execute concurrently without affecting the result of the program. This is called instruction-level parallelism.

- Task parallelism – Task parallelism decomposes a task into subtasks and allocates each subtask to a processor for execution. The processors then execute the subtasks concurrently.

- Data-level parallelism (DLP) – Instructions from a single stream operate concurrently on several data items. DLP is limited by irregular data-manipulation patterns and by memory bandwidth.
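The bit-level example in the first bullet above can be traced in a few lines. The Python sketch below models a hypothetical 8-bit processor whose only arithmetic instruction is an 8-bit add with carry. The names `add8`, `add16_on_8bit`, and `add16_on_16bit` are illustrative, not taken from any real instruction set. Adding two 16-bit integers takes two such instructions: one adds the low-order bytes, and the other adds the high-order bytes plus the carry. A 16-bit processor needs only one instruction. Results wrap around modulo 2^16, as they would in a 16-bit register.

```python
MASK8 = 0xFF  # an 8-bit register holds values 0..255


def add8(a, b, carry_in=0):
    """One hypothetical 8-bit ADD instruction: returns (8-bit result, carry-out flag)."""
    total = a + b + carry_in
    return total & MASK8, total >> 8


def add16_on_8bit(x, y):
    """Add two 16-bit integers on an 8-bit processor: two instructions."""
    # Instruction 1: add the low-order bytes and remember the carry.
    low, carry = add8(x & MASK8, y & MASK8)
    # Instruction 2: add the high-order bytes plus the carry from instruction 1.
    # Any carry out of this step (overflow past 16 bits) is discarded.
    high, _ = add8(x >> 8, y >> 8, carry)
    return (high << 8) | low


def add16_on_16bit(x, y):
    """A 16-bit processor does the same work in a single instruction."""
    return (x + y) & 0xFFFF


print(add16_on_8bit(0x12FF, 0x0001))   # prints 4864 (0x1300)
print(add16_on_16bit(0x12FF, 0x0001))  # prints 4864 (0x1300)
```

The carry from the low-order byte is what forces the second instruction to wait for the first. A wider word removes that dependency by doing both halves in hardware at once.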

Why Use Parallel Computing?

- In the natural world, many complex, interrelated events are happening at the same time, yet within a temporal sequence.

- Compared to serial computing, parallel computing is much better suited for modeling, simulating, and understanding complex, real-world phenomena.

- For example, imagine modeling galaxy formation, planetary movement, or climate change.

Main Reasons for Using Parallel Programming

SAVE TIME AND/OR MONEY

- In theory, throwing more resources at a task will shorten its time to completion, with potential cost savings.

- Parallel computers can be built from cheap, commodity components.

SOLVE LARGER / MORE COMPLEX PROBLEMS

- Many problems are so large and/or complex that it is impractical or impossible to solve them using a serial program, especially given limited computer memory.

- Example: “Grand Challenge Problems” (en.wikipedia.org/wiki/Grand_Challenge) requiring petaflops and petabytes of computing resources.

- Example: Web search engines/databases processing millions of transactions every second.

The biological brain is a massively parallel computer

In the early 1970s, at the MIT Artificial Intelligence Laboratory (now part of the MIT Computer Science and Artificial Intelligence Laboratory), Marvin Minsky and Seymour Papert began developing the Society of Mind theory, which views the biological brain as a massively parallel computer. In 1986, Minsky published The Society of Mind, which claims that the “mind is formed from many little agents, each mindless by itself”. The theory attempts to explain how what we call intelligence could be a product of the interaction of non-intelligent parts. Minsky said that the biggest source of ideas for the theory came from his work trying to create a machine that uses a robotic arm, a video camera, and a computer to build with children’s blocks.

The Future Of Parallel Computing

- During the past 20+ years, the trends indicated by ever-faster networks, distributed systems, and multi-processor computer architectures (even at the desktop level) clearly show that parallelism is the future of computing.

- In this same time period, there has been a greater than 500,000x increase in supercomputer performance, with no end currently in sight.

- I would like to share with you all how Intel is using parallel computing:

https://www.intel.com/pressroom/kits/upcrc/ParallelComputing_backgrounder.pdf
